Publications & Research

My research focuses on vision-based robot learning and its applications in navigation, manipulation, and computational design.

Latest Work

Jacta: A Versatile Planner for Learning Dexterous and Whole-body Manipulation

Jan Brüdigam, Ali Adeeb Abbas, Maks Sorokin, Kuan Fang, Brandon Hung, Maya Guru, Stefan Sosnowski, Jiuguang Wang, Sandra Hirche, Simon Le Cleac'h

IEEE Robotics and Automation Letters (RA-L) 2024

We combined reinforcement learning with sampling-based planning to solve contact-rich manipulation tasks. While sampling-based planners can quickly find successful trajectories for complex manipulation tasks, these solutions often lack robustness. We used reinforcement learning to distill a set of planner demonstrations into a single, more robust policy that can handle variations and uncertainties in real-world scenarios.

Publications

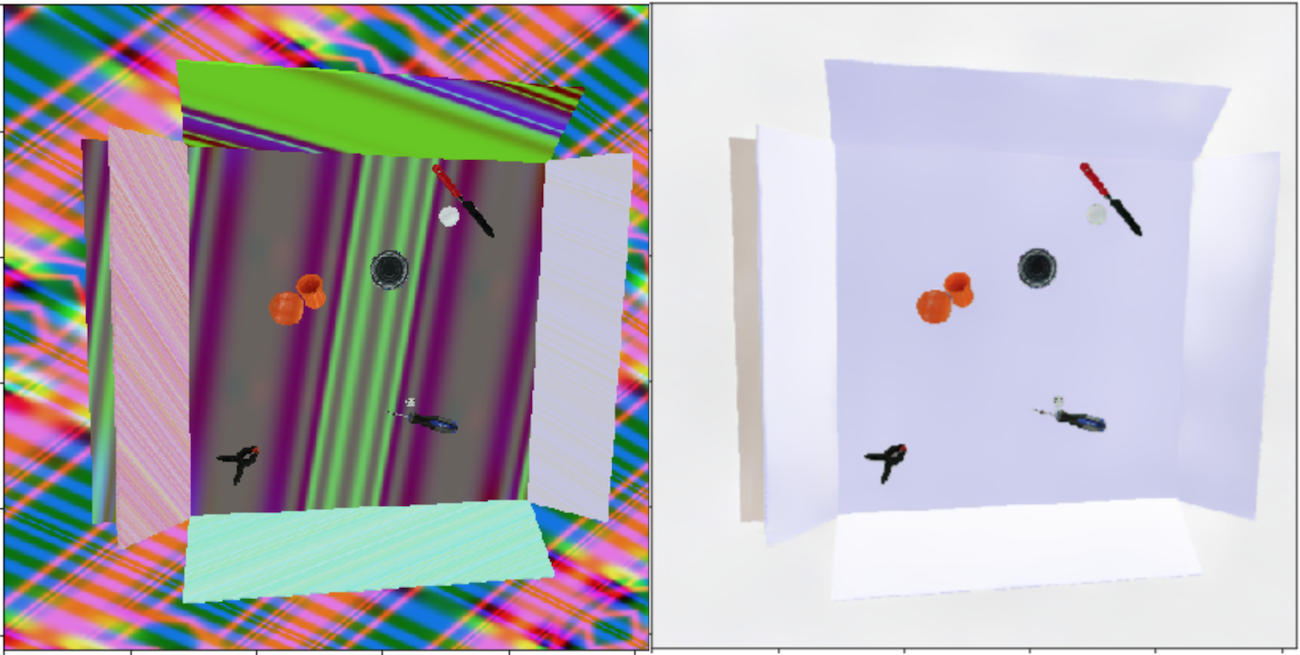

On Designing a Learning Robot: Improving Morphology for Enhanced Task Performance and Learning

Maks Sorokin, Chuyuan Fu, Jie Tan, C. Karen Liu, Yunfei Bai, Wenlong Lu, Sehoon Ha, Mohi Khansari

International Conference on Intelligent Robots and Systems (IROS) 2023

We present a learning-oriented morphology optimization framework that accounts for the interplay between a robot's morphology, its onboard perception, and the tasks it performs. We find that holistically optimized morphologies improve the robot's performance by 15-20% on various manipulation tasks and require 25x less data to match the performance of morphologies designed by human experts.

Human Motion Control of Quadrupedal Robots using Deep Reinforcement Learning

Sunwoo Kim, Maks Sorokin, Jehee Lee, Sehoon Ha

Proceedings of Robotics: Science and Systems (RSS) 2022

We propose a novel motion control system that allows a human user to seamlessly control a quadrupedal robot to perform various motor tasks. Using our system, a user can execute standing, sitting, tilting, manipulating, walking, and turning on both simulated and real quadrupeds.

Relax, it doesn't matter how you get there!

Mehdi Azabou, Michael Mendelson, Maks Sorokin, Shantanu Thakoor, Nauman Ahad, Carolina Urzay, Eva L Dyer

Neural Information Processing Systems (NeurIPS) 2023 - Spotlight

We introduce Bootstrap Across Multiple Scales (BAMS), a multi-scale self-supervised representation learning model for behavior analysis. BAMS combines a pooling module, which aggregates features extracted by encoders with different temporal receptive fields, with latent objectives that bootstrap the representations in each respective space to encourage disentanglement across timescales.

Learning to Navigate Sidewalks in Outdoor Environments

Maks Sorokin, Jie Tan, C. Karen Liu, Sehoon Ha

IEEE Robotics and Automation Letters (RA-L) 2022

We design a system that enables zero-shot transfer of vision-based policies to real-world outdoor environments for the sidewalk navigation task. We evaluate our approach on a quadrupedal robot that navigated real-world sidewalks, walking 3.2 kilometers with a limited number of human interventions.

Learning Human Search Behavior from Egocentric View

Maks Sorokin, Wenhao Yu, Sehoon Ha, C. Karen Liu

EUROGRAPHICS 2021

We train a vision-based agent to search for objects in photorealistic 3D scenes and propose a motion synthesis mechanism for head-motion retargeting. Using this mechanism, we enable object-searching behavior in an animated human character (PFNN/NSM).

A Few Shot Adaptation of Visual Navigation Skills to New Observations using Meta-Learning

Qian Luo, Maks Sorokin, Sehoon Ha

IEEE International Conference on Robotics and Automation (ICRA) 2021

We show how vision-based navigation agents can be trained to adapt to new sensor configurations with only three shots of experience. We achieve rapid adaptation by introducing a bottleneck between the perception and control networks and by meta-adapting the perception component.